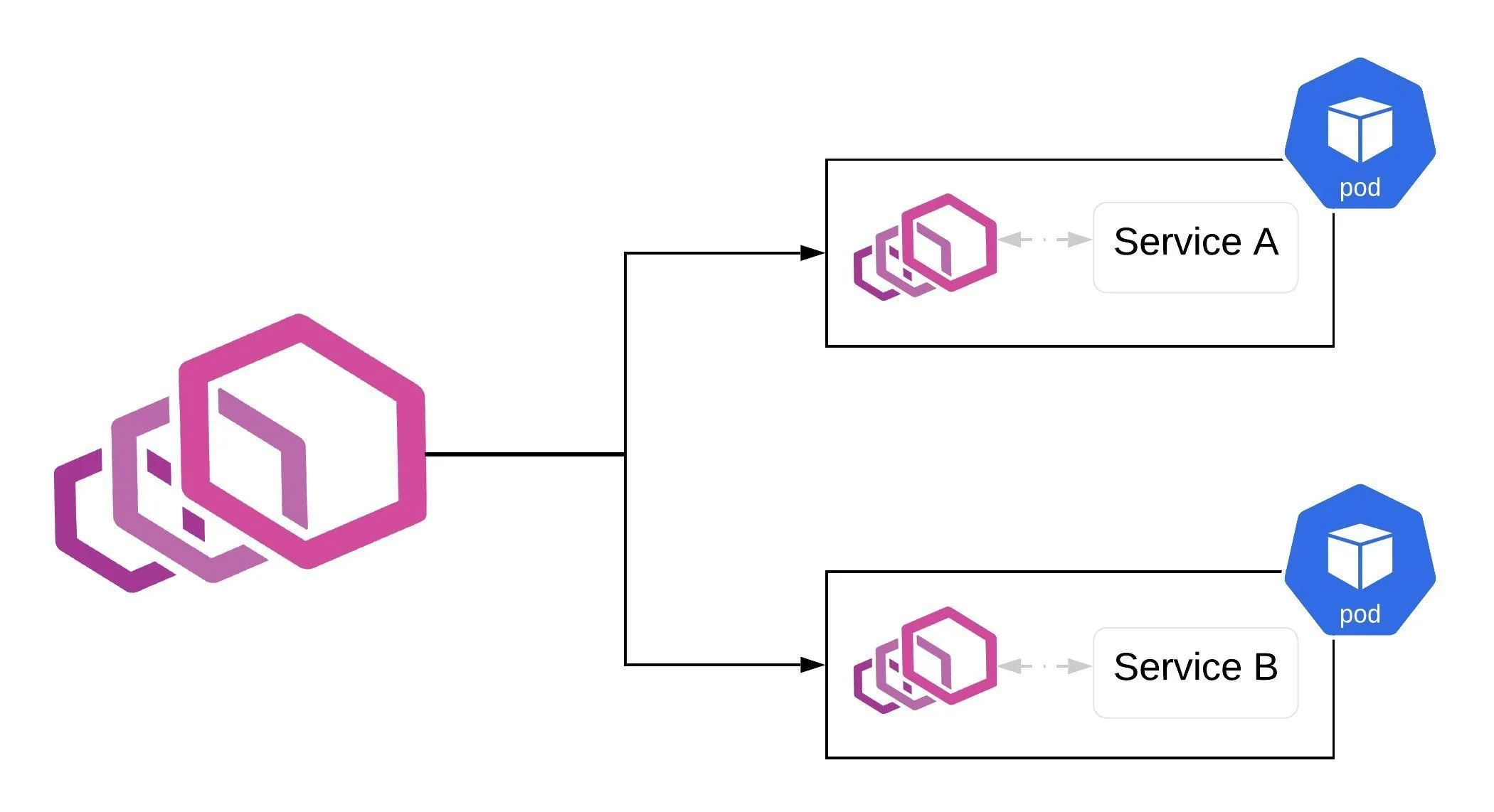

This post walks through a working demo of a service mesh architecture built with Envoy, using a sample gRPC application. Envoy plays two roles in this setup: a front (edge) proxy that receives client traffic, and a sidecar proxy running next to each application container. Both are configured statically. The setup is deployed in a Kubernetes cluster on Amazon EKS.

Pre-requisites

We will deploy the echo-grpc test application that Google provides in its article on gRPC load balancing, which served as the reference for testing this service mesh setup with Envoy. That article only covers setting up Envoy as an edge proxy. echo-grpc is a simple gRPC application that exposes a unary method: it takes a string in the content field of the request and responds with the content unaltered. Repo: grpc-gke-nlb-tutorial

- Clone this repo.

- Go to the echo-grpc directory.

- Using the Dockerfile provided in the folder, build the image and push it to the Docker registry of your choice. Since we are not using GCP, Docker Hub is used as the registry.

- Run `docker login` and log in with your Docker Hub credentials.

- Build the image: `docker build -t echo-grpc .`

- Tag the image: `docker tag echo-grpc <hub-username>/echo-grpc`

- Push the image: `docker push <hub-username>/echo-grpc`

- Create a separate folder to put all the YAML files.

- Create a namespace in Kubernetes: `kubectl create namespace envoy`

- Install the grpcurl tool, which is similar to curl but for gRPC, for testing: `go install github.com/fullstorydev/grpcurl/cmd/grpcurl@latest`

Sidecar Deployment

Envoy configuration for the sidecar deployment:

envoy-echo.yaml:

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: envoy-echo
    data:
      envoy.yaml: |
        static_resources:
          listeners:
          - address:
              socket_address:
                address: 0.0.0.0
                # Port on which the sidecar accepts traffic
                port_value: 8786
            filter_chains:
            - filters:
              - name: envoy.http_connection_manager
                config:
                  access_log:
                  - name: envoy.file_access_log
                    config:
                      path: "/dev/stdout"
                  codec_type: AUTO
                  stat_prefix: ingress_https
                  route_config:
                    name: local_route
                    virtual_hosts:
                    - name: https
                      domains:
                      - "*"
                      routes:
                      # Send all calls to the api.Echo service to the echo-grpc cluster
                      - match:
                          prefix: "/api.Echo/"
                        route:
                          cluster: echo-grpc
                  http_filters:
                  - name: envoy.health_check
                    config:
                      pass_through_mode: false
                      headers:
                      - name: ":path"
                        exact_match: "/healthz"
                      - name: "x-envoy-livenessprobe"
                        exact_match: "healthz"
                  - name: envoy.router
                    config: {}
          clusters:
          - name: echo-grpc
            connect_timeout: 0.5s
            type: STATIC
            lb_policy: ROUND_ROBIN
            http2_protocol_options: {}
            load_assignment:
              cluster_name: echo-grpc
              endpoints:
              - lb_endpoints:
                - endpoint:
                    address:
                      socket_address:
                        # The application container in the same pod
                        address: "127.0.0.1"
                        port_value: 8081
            health_checks:
              timeout: 1s
              interval: 10s
              unhealthy_threshold: 2
              healthy_threshold: 2
              grpc_health_check: {}
        admin:
          access_log_path: "/dev/stdout"
          address:
            socket_address:
              address: 127.0.0.1
              port_value: 8090

A couple of things to note here:

- The sidecar listens on port 8786 of the container.

- The envoy.http_connection_manager filter handles the HTTP traffic.

- route_config defines which cluster the routes of each domain are sent to. Here the domain is "*", so requests for any domain are accepted.

- A cluster in Envoy defines the upstream service that is called for a matching route.

- In the cluster, lb_policy sets the load-balancing algorithm, here ROUND_ROBIN. The type is STATIC because a sidecar only ever talks to the application container in its own pod. For the same reason, the address in socket_address is localhost (127.0.0.1), and port_value is the port exposed by the application container in that service's deployment (8081).
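To make the routing notes above more concrete, here is a minimal Python sketch of prefix-based route selection. It only illustrates the idea and is not Envoy's implementation; `ROUTES` and `pick_cluster` are hypothetical names introduced for this example. It relies on the fact that a gRPC call is an HTTP/2 request whose path has the form `/<package>.<Service>/<Method>`, so the Echo method of the api.Echo service arrives as `/api.Echo/Echo`.

```python
from typing import Optional

# Hypothetical, simplified mirror of the route_config above:
# each entry maps a path prefix to a cluster name.
ROUTES = [
    {"prefix": "/api.Echo/", "cluster": "echo-grpc"},
]


def pick_cluster(path: str, routes=ROUTES) -> Optional[str]:
    """Return the cluster of the first route whose prefix matches the path."""
    for route in routes:
        if path.startswith(route["prefix"]):
            return route["cluster"]
    return None  # no route matched; a proxy would reject such a request


print(pick_cluster("/api.Echo/Echo"))   # path of the Echo RPC
print(pick_cluster("/api.Other/Ping"))  # a path that no route covers
```

Because the only route uses the prefix /api.Echo/, any other gRPC service added to the application would need its own route entry before the sidecar forwards its calls.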

Run:

kubectl apply -f envoy-echo.yaml -n envoy

Next, deploy the echo-grpc application with 3 replicas. The pod spec contains two containers: one for the application and one running the Envoy image.

echo-deployment.yaml:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: echo-grpc
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: echo-grpc
      template:
        metadata:
          labels:
            app: echo-grpc
        spec:
          containers:
          # The test application
          - name: echo-grpc
            image: <hub-username>/echo-grpc
            imagePullPolicy: Always
            resources: {}
            env:
            - name: "PORT"
              value: "8081"
            ports:
            - containerPort: 8081
            readinessProbe:
              exec:
                command: ["/bin/grpc_health_probe", "-addr=:8081"]
              initialDelaySeconds: 1
            livenessProbe:
              exec:
                command: ["/bin/grpc_health_probe", "-addr=:8081"]
              initialDelaySeconds: 1
          # The Envoy sidecar
          - name: envoy
            image: envoyproxy/envoy:v1.9.1
            resources: {}
            ports:
            # Matches the listener port in the envoy-echo ConfigMap
            - name: http2-echo
              containerPort: 8786
            volumeMounts:
            - name: config
              mountPath: /etc/envoy
          volumes:
          - name: config
            configMap:
              name: envoy-echo

Here, echo-grpc is the test application and envoy is deployed alongside it in the same pod. The config volume is mounted so that Envoy can read its configuration from the ConfigMap at /etc/envoy/envoy.yaml.

Run:

kubectl apply -f echo-deployment.yaml -n envoy

Headless Service Configuration

We use a headless Service for echo-grpc. A headless Service (clusterIP: None) has no virtual IP, so the Kubernetes DNS server returns the IP addresses of the individual pods, and Envoy uses these for service discovery.

echo-service.yaml

    apiVersion: v1
    kind: Service
    metadata:
      name: echo-grpc
    spec:
      type: ClusterIP
      clusterIP: None
      selector:
        app: echo-grpc
      ports:
      - name: http2-echo
        protocol: TCP
        port: 8786
      - name: http2-service
        protocol: TCP
        port: 8081

In the above config file, we expose two ports: one for the Envoy sidecar (the same port we set in the ConfigMap of the sidecar Envoy) and one for the service itself.

Run:

kubectl apply -f echo-service.yaml -n envoy
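Once the Service is applied, the effect of making it headless can be checked with a DNS lookup. The following Python sketch uses only the standard library; `resolve_endpoints` is a hypothetical helper name, and the expectation that the Service name resolves to one address per ready pod assumes the code runs inside a pod of the same cluster.

```python
import socket


def resolve_endpoints(host: str, port: int) -> list:
    """Return the sorted, de-duplicated IPv4 addresses that host resolves to."""
    infos = socket.getaddrinfo(
        host, port, family=socket.AF_INET, type=socket.SOCK_STREAM
    )
    # Each entry is (family, type, proto, canonname, sockaddr);
    # for IPv4, sockaddr is an (ip, port) tuple.
    return sorted({info[4][0] for info in infos})


# Assumption: run from inside the cluster, for example
#   resolve_endpoints("echo-grpc.envoy.svc.cluster.local", 8786)
# which should list the pod IPs rather than a single virtual IP.
```

With a regular ClusterIP Service the same lookup would return a single virtual IP, which would hide the individual pods from the front Envoy.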

Front Envoy Configuration

Create a Service of type LoadBalancer so that clients can reach the backend service through the front Envoy.

envoy-service.yaml:

    apiVersion: v1
    kind: Service
    metadata:
      name: envoy
    spec:
      type: LoadBalancer
      selector:
        app: envoy
      ports:
      - name: https
        protocol: TCP
        port: 443
        targetPort: 443

Run:

kubectl apply -f envoy-service.yaml -n envoy

Creating self-signed certificates

Since the front Envoy LoadBalancer is deployed on port 443, we have to create a self-signed certificate so that Envoy can terminate the SSL/TLS connection.

- Get the external address of the load balancer:

      kubectl get svc/envoy -n envoy

- Copy the LoadBalancer address from the EXTERNAL-IP column, run nslookup on it, and copy the IP address:

      nslookup <your load balancer address>

- Create a self-signed cert and key:

      openssl req -x509 -nodes -newkey rsa:2048 -days 365 -keyout privkey.pem -out cert.pem -subj "/CN=<ip-address>"

- Create a Kubernetes TLS Secret called envoy-certs that contains the self-signed SSL/TLS certificate and key. The dry run only prints the manifest, so pipe it to kubectl apply to actually create the Secret in the envoy namespace:

      kubectl create secret tls envoy-certs --key privkey.pem --cert cert.pem --dry-run=client -o yaml | kubectl apply -n envoy -f -

Edge Envoy configuration

Configuration for the edge Envoy:

envoy-configmap.yaml

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: envoy-conf
    data:
      envoy.yaml: |
        static_resources:
          listeners:
          - address:
              socket_address:
                address: 0.0.0.0
                port_value: 443
            filter_chains:
            - filters:
              - name: envoy.http_connection_manager
                config:
                  access_log:
                  - name: envoy.file_access_log
                    config:
                      path: "/dev/stdout"
                  codec_type: AUTO
                  stat_prefix: ingress_https
                  route_config:
                    name: local_route
                    virtual_hosts:
                    - name: https
                      domains:
                      - "*"
                      routes:
                      - match:
                          prefix: "/api.Echo/"
                        route:
                          cluster: echo-grpc
                  http_filters:
                  - name: envoy.health_check
                    config:
                      pass_through_mode: false
                      headers:
                      - name: ":path"
                        exact_match: "/healthz"
                      - name: "x-envoy-livenessprobe"
                        exact_match: "healthz"
                  - name: envoy.router
                    config: {}
              # Certificate and key come from the envoy-certs Secret
              tls_context:
                common_tls_context:
                  tls_certificates:
                  - certificate_chain:
                      filename: "/etc/ssl/envoy/tls.crt"
                    private_key:
                      filename: "/etc/ssl/envoy/tls.key"
          clusters:
          - name: echo-grpc
            connect_timeout: 0.5s
            # Discover the pods through the DNS name of the headless Service
            type: STRICT_DNS
            lb_policy: ROUND_ROBIN
            http2_protocol_options: {}
            load_assignment:
              cluster_name: echo-grpc
              endpoints:
              - lb_endpoints:
                - endpoint:
                    address:
                      socket_address:
                        address: echo-grpc.envoy.svc.cluster.local
                        # Port of the Envoy sidecar, not of the application
                        port_value: 8786
            health_checks:
              timeout: 1s
              interval: 10s
              unhealthy_threshold: 2
              healthy_threshold: 2
              grpc_health_check: {}
        admin:
          access_log_path: "/dev/stdout"
          address:
            socket_address:
              address: 127.0.0.1
              port_value: 8090

Since HTTPS is terminated here, the listener uses a port_value of 443. Most of the configuration is the same as for the sidecar Envoy, except for three things:

- A tls_context config is required; it points to the TLS certificate and private key that Envoy uses to terminate TLS connections.

- In clusters, the type has been changed from STATIC to STRICT_DNS, a service discovery mechanism that makes use of the headless Service we deployed earlier.

- The address value of socket_address has been changed to the FQDN of that Service.
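As a rough illustration of how STRICT_DNS and ROUND_ROBIN combine, the Python sketch below cycles through a list of endpoints, one per request. It is a simplified model rather than Envoy's actual load balancer: `RoundRobinPicker` is a hypothetical class, the IP addresses are made-up stand-ins for the DNS answer of the headless Service, and periodic re-resolution and health checking are left out.

```python
import itertools


class RoundRobinPicker:
    """Cycle through a fixed list of endpoints, one per request."""

    def __init__(self, endpoints):
        endpoints = list(endpoints)
        if not endpoints:
            raise ValueError("at least one endpoint is required")
        self._cycle = itertools.cycle(endpoints)

    def pick(self) -> str:
        """Return the endpoint that should receive the next request."""
        return next(self._cycle)


# Made-up pod IPs standing in for the addresses returned by DNS.
picker = RoundRobinPicker(["10.0.1.11", "10.0.2.12", "10.0.3.13"])
picks = [picker.pick() for _ in range(6)]
print(picks)
```

With three endpoints, six consecutive picks visit every endpoint twice, which is the behaviour the hostname check in the testing step relies on.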

Run:

kubectl apply -f envoy-configmap.yaml -n envoy

Deployment Configuration

envoy-deployment.yaml

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: envoy
    spec:
      replicas: 2
      selector:
        matchLabels:
          app: envoy
      template:
        metadata:
          labels:
            app: envoy
        spec:
          containers:
          - name: envoy
            image: envoyproxy/envoy:v1.9.1
            resources: {}
            ports:
            - name: https
              containerPort: 443
            volumeMounts:
            - name: config
              mountPath: /etc/envoy
            - name: certs
              mountPath: /etc/ssl/envoy
            readinessProbe:
              httpGet:
                scheme: HTTPS
                path: /healthz
                httpHeaders:
                - name: x-envoy-livenessprobe
                  value: healthz
                port: 443
              initialDelaySeconds: 3
            livenessProbe:
              httpGet:
                scheme: HTTPS
                path: /healthz
                httpHeaders:
                - name: x-envoy-livenessprobe
                  value: healthz
                port: 443
              initialDelaySeconds: 10
          volumes:
          - name: config
            configMap:
              name: envoy-conf
          - name: certs
            secret:
              secretName: envoy-certs

Run:

kubectl apply -f envoy-deployment.yaml -n envoy
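The readiness and liveness probes in this deployment work together with the envoy.health_check filter in the edge configuration: the probe sends a request for /healthz with the x-envoy-livenessprobe header, and because pass_through_mode is false, Envoy answers it itself instead of forwarding it to a cluster. The Python sketch below models only the header matching; `HEALTH_CHECK_HEADERS` and `is_health_check` are hypothetical names for this illustration, and the sketch assumes every configured header must match exactly.

```python
# Header conditions copied from the envoy.health_check filter config.
HEALTH_CHECK_HEADERS = {
    ":path": "/healthz",
    "x-envoy-livenessprobe": "healthz",
}


def is_health_check(request_headers: dict) -> bool:
    """True only if every configured header is present with the exact value."""
    return all(
        request_headers.get(name) == value
        for name, value in HEALTH_CHECK_HEADERS.items()
    )


# The probe request matches both conditions.
print(is_health_check({":path": "/healthz", "x-envoy-livenessprobe": "healthz"}))
# A regular gRPC call does not, so it continues to the router filter.
print(is_health_check({":path": "/api.Echo/Echo"}))
```

Requiring the extra header means that an ordinary client request to /healthz is not treated as a health check.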

Testing

Proto file for the echo-grpc service:

echo.proto:

    syntax = "proto3";
    package api;
    service Echo {
      rpc Echo (EchoRequest) returns (EchoResponse) {}
    }
    message EchoRequest {
      string content = 1;
    }
    message EchoResponse {
      string content = 1;
    }

Run the following command to call the server:

grpcurl -d '{"content": "echo"}' -proto echo.proto -insecure -v <load_balancer_or_external_ip>:443 api.Echo/Echo

The output will look similar to this:

    Resolved method descriptor:
    rpc Echo ( .api.EchoRequest ) returns ( .api.EchoResponse );
    Request metadata to send:
    (empty)
    Response headers received:
    content-type: application/grpc
    date: Wed, 27 Feb 2019 04:40:19 GMT
    hostname: echo-grpc-5c4f59c578-wcsvr
    server: envoy
    x-envoy-upstream-service-time: 0
    Response contents:
    {
      "content": "echo"
    }
    Response trailers received:
    (empty)
    Sent 1 request and received 1 response

Run the above command multiple times and check the value of the hostname header each time; it will contain the name of one of the 3 pods deployed.
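Checking the hostname by hand gets tedious after a few runs, so the repetition can be scripted. The following Python sketch wraps the grpcurl command from this post and counts how often each pod answered. The helper names (`extract_hostname`, `call_echo`, `tally_hostnames`) are hypothetical, and the script assumes grpcurl is on the PATH and echo.proto is in the current directory.

```python
import collections
import re
import subprocess

# Matches the "hostname: <pod-name>" response header in grpcurl -v output.
HOSTNAME_RE = re.compile(r"^hostname:\s*(\S+)", re.MULTILINE)


def extract_hostname(grpcurl_output: str):
    """Return the pod name from one grpcurl output, or None if it is absent."""
    match = HOSTNAME_RE.search(grpcurl_output)
    return match.group(1) if match else None


def call_echo(target: str, proto: str = "echo.proto") -> str:
    """Run the grpcurl command from this post once and return its output."""
    cmd = [
        "grpcurl", "-d", '{"content": "echo"}', "-proto", proto,
        "-insecure", "-v", target, "api.Echo/Echo",
    ]
    result = subprocess.run(cmd, capture_output=True, text=True, check=True)
    return result.stdout


def tally_hostnames(outputs) -> collections.Counter:
    """Count how many of the given outputs came from each pod."""
    return collections.Counter(
        name for name in map(extract_hostname, outputs) if name
    )


# Example (needs a reachable load balancer):
#   target = "<load_balancer_or_external_ip>:443"
#   print(tally_hostnames(call_echo(target) for _ in range(10)))
```

If load balancing works as intended, the resulting counter should contain several of the echo-grpc pod names rather than a single one.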