What Is Agent Communication in AI Systems?

AI agent communication refers to the structured exchange of intent, context, authority, and outcomes between autonomous agents, tools, services, and platforms. Unlike traditional API calls between deterministic services, agent communication involves reasoning, delegated authority, and dynamic decision-making.

As organizations move from isolated AI agents to interconnected agent ecosystems, communication becomes the most critical control layer. It determines how tasks are delegated, how authority is transferred, and how trust is enforced across systems.

Agent communication is not just a networking problem. It is fundamentally an identity and governance problem.

This guide explains what an agent mesh is, how AI agents communicate, which protocols are emerging, and how identity-centric trust models enable safe collaboration at scale.

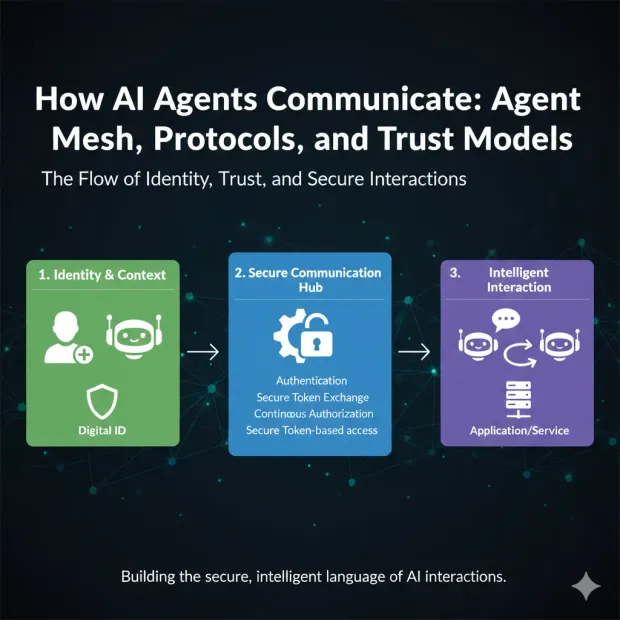

How AI Agents Communicate

AI agents communicate through structured, identity-bound exchanges of intent, context, and delegated authority within an agent mesh. Unlike traditional service communication, agent interactions are autonomous, goal-driven, and often involve authority transfer.

Securing agent communication requires:

- Identity verification at every interaction
- Explicit delegation semantics
- Policy-enforced boundaries
- Continuous trust evaluation

Agent meshes, standardized communication protocols, and identity-centric trust models are essential to scaling agentic systems safely.

Why Agent Communication Is a First-Class Architectural Problem

In traditional software systems, communication is predictable. Services call APIs. Authentication is static. Authorization follows predefined rules. Requests are deterministic.

Agentic systems operate differently.

AI agents communicate to delegate tasks, coordinate decisions, share context or memory, negotiate responsibilities, and chain multi-step actions across systems. Each interaction may include reasoning and interpretation of intent.

If communication is not governed, risk multiplies across the ecosystem. A single over-permissive delegation or implicit trust assumption can propagate through interconnected agents.

Agent communication is therefore about control, not just connectivity.

What Is an Agent Mesh?

An agent mesh is a distributed communication fabric that connects multiple autonomous agents, tools, and services into a coordinated system.

Unlike point-to-point calls, an agent mesh enables:

- Dynamic agent discovery
- Cross-system task delegation
- Context-aware collaboration
- Authority transfer across boundaries
- Continuous operation across sessions

In an agent mesh, no single agent has complete visibility. Trust must be explicitly established and continuously enforced.
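Dynamic discovery and explicit trust can work together. The following is a minimal Python sketch of an in-memory agent registry in which discovery only returns agents whose identity has been verified. The `AgentRecord` fields, the capability strings, and the `verified` flag are hypothetical. A real mesh would back this with an identity provider and a directory service.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class AgentRecord:
    # Hypothetical registry entry: a stable agent identity plus what it can do.
    agent_id: str
    capabilities: frozenset
    verified: bool  # set only after an identity provider has vetted the agent


@dataclass
class AgentRegistry:
    _agents: dict = field(default_factory=dict)

    def register(self, record: AgentRecord) -> None:
        self._agents[record.agent_id] = record

    def deregister(self, agent_id: str) -> None:
        # Removing an agent makes it undiscoverable immediately.
        self._agents.pop(agent_id, None)

    def discover(self, capability: str) -> list:
        # Only verified agents are returned; unverified ones stay invisible
        # instead of being trusted by default.
        return sorted(
            a.agent_id
            for a in self._agents.values()
            if a.verified and capability in a.capabilities
        )


registry = AgentRegistry()
registry.register(AgentRecord("billing-agent", frozenset({"invoice.read"}), True))
registry.register(AgentRecord("shadow-agent", frozenset({"invoice.read"}), False))
print(registry.discover("invoice.read"))  # ['billing-agent']
```

Both agents claim the same capability, but only the verified one can be discovered. Trust is established before an agent becomes reachable, not after.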

Why Traditional Service Meshes Are Not Enough

Service meshes secure transport and manage traffic between microservices. They provide routing, mutual TLS, load balancing, and observability.

However, service meshes assume deterministic services and fixed APIs.

AI agents operate differently. They make intent-based requests, engage in multi-step reasoning, and may modify or reinterpret goals. Authority can be delegated dynamically. Context evolves during execution.

Transport-level security does not validate whether an agent should act on a request. Agent communication requires semantic trust layered on top of transport security.
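The gap between transport trust and semantic trust fits in a few lines. This sketch assumes the service mesh has already completed mutual TLS and passed along the peer's identity as a SPIFFE-style ID string. The IDs, intents, and policy table are hypothetical. Even so, the semantic layer still has to decide whether that authenticated peer may request a particular intent.

```python
# Hypothetical policy table: which intents each authenticated peer may request.
INTENT_POLICY = {
    "spiffe://example.org/agent/support": {"ticket.read", "ticket.comment"},
    "spiffe://example.org/agent/finance": {"invoice.read", "refund.issue"},
}


def authorize(peer_id: str, intent: str) -> bool:
    """Semantic check layered on top of transport authentication.

    `peer_id` is assumed to come from a verified mTLS handshake, so it tells
    us *who* is calling. This function answers the separate question of
    whether that caller should be allowed to act on the requested intent.
    """
    allowed = INTENT_POLICY.get(peer_id, set())
    return intent in allowed


# Both calls arrive over an authenticated channel; only one is permitted.
print(authorize("spiffe://example.org/agent/support", "ticket.comment"))  # True
print(authorize("spiffe://example.org/agent/support", "refund.issue"))    # False
```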

How Agent-to-Agent (A2A) Communication Works

Agent-to-agent communication occurs when one autonomous agent directly interacts with another to request a task, delegate authority, or exchange contextual information.

A typical A2A interaction includes:

- Intent declaration
- Context transfer
- Authority scoping
- Policy evaluation
- Outcome delivery or further delegation

Each of these steps has governance implications. Identity must be verified. Authority must be validated. Context must be bounded.

Without these controls, delegation becomes uncontrolled privilege escalation.
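One way to stop further delegation from turning into privilege escalation is to require that each hop can only narrow authority, never widen it. The sketch below models scopes as plain strings. That is an assumption: real systems often use OAuth scopes or richer policy objects. Any delegation whose requested scopes are not a subset of the delegator's own is rejected.

```python
class EscalationError(Exception):
    """Raised when a delegation would grant more authority than the delegator holds."""


def delegate(delegator_scopes: frozenset, requested_scopes: frozenset) -> frozenset:
    # Authority scoping: the delegate may receive at most what the delegator has.
    excess = requested_scopes - delegator_scopes
    if excess:
        raise EscalationError(f"cannot delegate scopes not held: {sorted(excess)}")
    return requested_scopes


planner = frozenset({"calendar.read", "calendar.write", "email.send"})

# Narrowing is allowed at each hop...
scheduler = delegate(planner, frozenset({"calendar.read", "calendar.write"}))
reader = delegate(scheduler, frozenset({"calendar.read"}))

# ...but a downstream agent cannot regain authority that was dropped upstream.
try:
    delegate(reader, frozenset({"calendar.read", "email.send"}))
except EscalationError as err:
    print(err)  # cannot delegate scopes not held: ['email.send']
```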

The Risk of Implicit Trust Between Agents

Implicit trust occurs when agents assume other agents are safe without verification, honor requests without policy checks, or transfer authority informally.

In distributed agent systems, implicit trust leads to:

- Privilege amplification
- Loss of accountability
- Cascading failures
- Cross-tenant risk

Safe agent architectures enforce Zero Trust principles. Every interaction must be identity-bound, policy-evaluated, and context-aware.

Trust must be continuously verified, not assumed.
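Python's standard `hmac` module can illustrate verifying every message instead of trusting the channel. This sketch assumes each agent shares a per-agent secret with the verifier. Production systems more commonly rely on asymmetric signatures or short-lived tokens issued by an identity provider. The agent IDs, keys, and 300-second freshness window are hypothetical.

```python
import hashlib
import hmac
import json
import time

# Hypothetical per-agent secrets held by the verifier (never hard-code real keys).
AGENT_KEYS = {"research-agent": b"research-secret"}
MAX_AGE_SECONDS = 300
_seen_nonces = set()


def sign(agent_id: str, key: bytes, body: dict, nonce: str, ts: float) -> dict:
    # Canonical JSON so the signer and verifier hash identical bytes.
    payload = json.dumps(
        {"agent": agent_id, "body": body, "nonce": nonce, "ts": ts}, sort_keys=True
    ).encode()
    sig = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return {"agent": agent_id, "body": body, "nonce": nonce, "ts": ts, "sig": sig}


def verify(msg: dict, now: float) -> bool:
    key = AGENT_KEYS.get(msg["agent"])
    if key is None:
        return False  # unknown identity: no implicit trust
    if abs(now - msg["ts"]) > MAX_AGE_SECONDS or msg["nonce"] in _seen_nonces:
        return False  # stale or replayed message
    expected = sign(msg["agent"], key, msg["body"], msg["nonce"], msg["ts"])["sig"]
    if not hmac.compare_digest(expected, msg["sig"]):
        return False  # tampered body or wrong key
    _seen_nonces.add(msg["nonce"])  # remember nonce only after a successful check
    return True


now = time.time()
msg = sign("research-agent", b"research-secret", {"intent": "summarize"}, "n-1", now)
print(verify(msg, now))  # True
print(verify(msg, now))  # False: the same nonce is rejected as a replay
```

Every message is checked for identity, integrity, and freshness, even when it comes from an "internal" agent.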

Protocols for Secure Agent Communication

As agent ecosystems mature, standardized communication protocols are emerging. These protocols define how agents express intent, encode delegation, bind identity, and preserve context boundaries.

Agent communication protocols must:

- Be identity-aware
- Encode delegation semantics
- Preserve contextual integrity
- Enable auditability and replay

Ad-hoc messaging cannot scale safely. Explicit protocols replace informal trust with enforceable boundaries.

Model Context Protocol (MCP)

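The Model Context Protocol is an open protocol, built on JSON-RPC 2.0, that standardizes how AI applications and agents obtain context from external sources: the tools, resources, and prompts exposed by MCP servers.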

MCP addresses:

- What context an agent may access
- How context is structured
- How boundaries are enforced

Improper context handling introduces security risks such as prompt injection and data overexposure. A secure MCP implementation requires strict scoping, input validation, and identity-bound enforcement.
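A small filter in front of an MCP server can illustrate scoping and boundary enforcement. MCP messages are JSON-RPC 2.0. `resources/read` (with a `uri` parameter) and `tools/call` (with `name` and `arguments`) are methods defined by the MCP specification. The per-agent allowlists, the agent IDs, and the idea of an identity-aware proxy sitting in front of the server are assumptions made for illustration.

```python
# Hypothetical identity-bound policy: which resource URI prefixes and tools
# each calling agent may use through this proxy.
MCP_POLICY = {
    "support-agent": {
        "resource_prefixes": ("file:///kb/public/",),
        "tools": {"search_kb"},
    },
}


def allow_mcp_request(agent_id: str, request: dict) -> bool:
    """Decide whether to forward a JSON-RPC 2.0 MCP request to the server."""
    scope = MCP_POLICY.get(agent_id)
    if scope is None or request.get("jsonrpc") != "2.0":
        return False
    method = request.get("method")
    params = request.get("params") or {}
    if method == "resources/read":
        uri = params.get("uri", "")
        if ".." in uri:
            return False  # crude guard against path traversal before prefix matching
        return uri.startswith(scope["resource_prefixes"])
    if method == "tools/call":
        return params.get("name") in scope["tools"]
    # Default deny. A usable proxy would also forward lifecycle and listing
    # methods (such as initialize or tools/list), ideally filtering their results.
    return False


ok = {"jsonrpc": "2.0", "id": 1, "method": "resources/read",
      "params": {"uri": "file:///kb/public/faq.md"}}
leak = {"jsonrpc": "2.0", "id": 2, "method": "resources/read",
        "params": {"uri": "file:///kb/internal/salaries.csv"}}
print(allow_mcp_request("support-agent", ok))    # True
print(allow_mcp_request("support-agent", leak))  # False
```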

Agent Communication Protocol (ACP)

The Agent Communication Protocol standardizes how agents communicate with each other.

In a governed deployment, every ACP request should include:

- Explicit identity binding
- Clear intent declaration
- Scoped authority
- Defined expected outcomes

Structured exchanges prevent blind acceptance of requests: a receiving agent can reject anything that lacks these elements, and every action must still pass identity and policy validation.
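A receiving agent can refuse to act on any request that does not carry all four elements listed above. The sketch below is not ACP's actual wire format. The field names (`sender`, `intent`, `scopes`, `expected_outcome`) and the accepted-intent table are hypothetical, chosen only to show structured validation with default rejection.

```python
from dataclasses import dataclass

# Hypothetical: intents this agent accepts and the scope each one requires.
ACCEPTED_INTENTS = {"report.generate": "reports.write"}


@dataclass(frozen=True)
class AgentRequest:
    sender: str             # explicit identity binding (assumed already authenticated)
    intent: str             # clear intent declaration
    scopes: frozenset       # scoped authority granted to the sender
    expected_outcome: str   # defined expected outcome


def validate(req: AgentRequest) -> tuple:
    """Return (accepted, reason); anything incomplete or out of scope is rejected."""
    if not (req.sender and req.intent and req.expected_outcome):
        return False, "missing required field"
    required_scope = ACCEPTED_INTENTS.get(req.intent)
    if required_scope is None:
        return False, f"unsupported intent: {req.intent}"
    if required_scope not in req.scopes:
        return False, f"sender lacks scope: {required_scope}"
    return True, "accepted"


print(validate(AgentRequest("analytics-agent", "report.generate",
                            frozenset({"reports.write"}), "PDF summary")))
# (True, 'accepted')
print(validate(AgentRequest("analytics-agent", "report.delete",
                            frozenset({"reports.write"}), "")))
# (False, 'missing required field')
```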

Delegated Authorization in Agent Systems

Delegated authorization allows one agent to act on behalf of a user or another agent within defined constraints.

Safe delegation must be:

- Explicit
- Scoped
- Time-bound
- Auditable
- Revocable

Protocols that fail to encode delegation semantics create hidden authority transfer, increasing systemic risk.

Delegation without identity governance undermines accountability.
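Each of the five properties of safe delegation maps onto a field or method of a delegation grant. This minimal sketch uses an in-memory store and hypothetical names (`DelegationGrant`, `GrantStore`). In practice, the same ideas usually appear as signed tokens, for example the `act` (actor) claim from OAuth 2.0 Token Exchange (RFC 8693), combined with server-side revocation.

```python
import time
import uuid
from dataclasses import dataclass, field


@dataclass(frozen=True)
class DelegationGrant:
    grant_id: str
    principal: str       # who delegates (a user or another agent)
    delegate: str        # who may act on the principal's behalf
    scopes: frozenset    # scoped: only these actions
    expires_at: float    # time-bound: absolute expiry in epoch seconds


@dataclass
class GrantStore:
    grants: dict = field(default_factory=dict)
    revoked: set = field(default_factory=set)
    audit: list = field(default_factory=list)

    def issue(self, principal: str, delegate: str, scopes, ttl_seconds: float, now: float):
        # Explicit: authority exists only if a grant is issued here.
        grant = DelegationGrant(str(uuid.uuid4()), principal, delegate,
                                frozenset(scopes), now + ttl_seconds)
        self.grants[grant.grant_id] = grant
        self.audit.append(("issue", grant.grant_id, principal, delegate))
        return grant

    def revoke(self, grant_id: str) -> None:
        # Revocable: takes effect on the next check, even before expiry.
        self.revoked.add(grant_id)
        self.audit.append(("revoke", grant_id))

    def check(self, grant_id: str, delegate: str, scope: str, now: float) -> bool:
        grant = self.grants.get(grant_id)
        ok = (grant is not None and grant_id not in self.revoked
              and grant.delegate == delegate and scope in grant.scopes
              and now < grant.expires_at)
        # Auditable: every decision is recorded, whether allowed or denied.
        self.audit.append(("check", grant_id, delegate, scope, ok))
        return ok


store = GrantStore()
t0 = time.time()
g = store.issue("alice", "travel-agent", {"flights.book"}, ttl_seconds=600, now=t0)
print(store.check(g.grant_id, "travel-agent", "flights.book", now=t0 + 60))   # True
print(store.check(g.grant_id, "travel-agent", "flights.book", now=t0 + 900))  # False (expired)
store.revoke(g.grant_id)
print(store.check(g.grant_id, "travel-agent", "flights.book", now=t0 + 60))   # False (revoked)
```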

Identity as the Foundation of Agent Trust

Trust in agent communication is contextual. Agents must evaluate:

- Who is making the request
- Under what authority
- For what purpose
- Within which constraints

Without identity, requests cannot be attributed. Authority cannot be validated. Decisions cannot be explained.

Identity is the anchor that makes autonomous systems governable.

Trust Models for Agent Communication

Several trust models apply to agent ecosystems.

- Zero Trust requires verification of every request, even between internal agents.
- Policy-based trust derives authorization from explicit rules rather than static relationships.
- Contextual trust incorporates runtime signals such as data sensitivity and environmental risk.
- Delegation-aware trust evaluates chains of authority rather than direct identity alone.

Most production-grade agent systems combine these models.
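Combining the models usually means that a request must pass every layer and that any single layer can deny it. The sketch below chains all four models in one decision function. The request fields, the 0.0 to 1.0 risk scale, the per-action risk limits, and the agent names are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical policy: action -> maximum tolerated runtime risk score (0.0 to 1.0).
ACTION_RISK_LIMITS = {"crm.read": 0.7, "crm.export": 0.3}


@dataclass(frozen=True)
class Request:
    caller: str
    identity_verified: bool  # Zero Trust: verified on this request, never assumed
    action: str
    risk_score: float        # contextual signal, e.g. data sensitivity or device risk
    chain: tuple             # ((principal, frozenset_of_scopes), ...) from user to caller


def decide(req: Request) -> str:
    if not req.identity_verified:
        return "deny: unverified identity"
    max_risk = ACTION_RISK_LIMITS.get(req.action)  # policy-based: explicit rule per action
    if max_risk is None:
        return "deny: no policy for action"
    if req.risk_score > max_risk:
        return "deny: contextual risk too high"
    # Delegation-aware: the chain must end at the caller, and every hop
    # must hold the action, not just the last one.
    if not req.chain or req.chain[-1][0] != req.caller:
        return "deny: broken delegation chain"
    if any(req.action not in scopes for _, scopes in req.chain):
        return "deny: action not delegated along the full chain"
    return "allow"


chain = (("alice", frozenset({"crm.read", "crm.export"})),
         ("crm-agent", frozenset({"crm.read"})))
print(decide(Request("crm-agent", True, "crm.read", 0.2, chain)))    # allow
print(decide(Request("crm-agent", True, "crm.export", 0.2, chain)))  # deny: action not delegated along the full chain
```

The ordering here, cheapest checks first, is a design choice rather than a requirement. What matters is that no single layer can grant access on its own.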

Cross-System and Cross-Agent Trust

Agent communication frequently crosses organizational boundaries.

Internal agents may interact with vendor agents or multi-tenant ecosystems. These interactions introduce differing identity providers, policy models, and risk tolerances.

Federated identity frameworks and standardized communication protocols become essential to maintain trust consistency across domains.

API Gateways as Policy Enforcement Points

API gateways enforce identity validation and policy checks for agent interactions.

In agent ecosystems, gateways:

- Validate non-human identity
- Enforce scope and intent
- Apply rate limits
- Block unauthorized actions
- Log interactions for forensic analysis

Gateways prevent agents from bypassing identity controls when invoking tools or services.
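A gateway's responsibilities can be sketched as a single enforcement function. This is a simplified illustration, not the API of any particular gateway product. The credential table, scope names, and token-bucket parameters (capacity 5, refilling one token per second) are hypothetical.

```python
import logging
from dataclasses import dataclass

logging.basicConfig(level=logging.INFO, format="%(message)s")
log = logging.getLogger("agent-gateway")  # a real gateway would ship these to durable storage

# Hypothetical credential registry: API key -> (non-human identity, granted scopes).
AGENT_CREDENTIALS = {"key-123": ("ingest-agent", {"orders.read"})}


@dataclass
class TokenBucket:
    capacity: float = 5.0
    refill_per_sec: float = 1.0
    tokens: float = 5.0
    last: float = 0.0

    def take(self, now: float) -> bool:
        # Refill in proportion to elapsed time, capped at capacity, then spend one token.
        elapsed = now - self.last
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_sec)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


buckets = {}


def gateway(api_key: str, required_scope: str, now: float) -> int:
    """Return an HTTP-style status code for one agent request."""
    identity = AGENT_CREDENTIALS.get(api_key)
    if identity is None:
        log.info("deny: unknown credential")
        return 401  # identity could not be validated
    agent, scopes = identity
    if required_scope not in scopes:
        log.info("deny %s: missing scope %s", agent, required_scope)
        return 403  # authenticated, but not authorized for this action
    bucket = buckets.setdefault(agent, TokenBucket(last=now))
    if not bucket.take(now):
        log.info("throttle %s", agent)
        return 429  # rate limit exceeded
    log.info("allow %s -> %s", agent, required_scope)
    return 200


statuses = [gateway("key-123", "orders.read", now=100.0) for _ in range(6)]
print(statuses)  # [200, 200, 200, 200, 200, 429]
```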

Tool Invocation as Governed Communication

When an agent invokes a tool or API, it participates in structured communication.

Tool invocation must be governed through:

- Approved tool catalogs
- Identity-bound access control
- Scoped authorization
- Full audit logging

Unrestricted tool access creates the fastest path to systemic abuse.
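The four controls can be combined in a thin wrapper that every tool call passes through. The catalog entries, scope names, and the `send_email` stand-in tool are hypothetical. The key idea is that the agent never calls a tool function directly. It goes through `invoke_tool`, which checks the catalog, the caller's scopes, and the argument names before running anything, and it logs every attempt.

```python
from typing import Any, Callable, Dict, List


def send_email(to: str, subject: str) -> str:
    return f"queued email to {to}: {subject}"  # hypothetical stand-in for a real side effect


# Approved tool catalog: only tools listed here can be invoked at all.
CATALOG: Dict[str, dict] = {
    "send_email": {"fn": send_email, "scope": "email.send", "args": {"to", "subject"}},
}
AUDIT_LOG: List[dict] = []


class ToolDenied(Exception):
    pass


def invoke_tool(agent_id: str, scopes: set, tool: str, **kwargs: Any) -> Any:
    entry = CATALOG.get(tool)
    reason = None
    if entry is None:
        reason = "tool not in approved catalog"
    elif entry["scope"] not in scopes:
        reason = f"missing scope {entry['scope']}"
    elif set(kwargs) != entry["args"]:
        reason = "unexpected or missing arguments"
    # Full audit logging: record the attempt whether or not it is allowed.
    AUDIT_LOG.append({"agent": agent_id, "tool": tool, "args": kwargs, "denied": reason})
    if reason:
        raise ToolDenied(reason)
    fn: Callable[..., Any] = entry["fn"]
    return fn(**kwargs)


print(invoke_tool("outreach-agent", {"email.send"}, "send_email",
                  to="ops@example.com", subject="Daily report"))
# queued email to ops@example.com: Daily report
try:
    invoke_tool("outreach-agent", {"email.send"}, "delete_user", user_id="42")
except ToolDenied as err:
    print(err)  # tool not in approved catalog
```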

Preventing Cascading Failures in Agent Meshes

In an interconnected agent mesh, failures propagate quickly.

A compromised agent can delegate unsafe tasks, trigger additional agents, and amplify impact across systems.

Mitigation requires:

- Blast-radius controls
- Per-agent scope limits
- Kill switches
- Continuous runtime monitoring
- Identity-bound trust boundaries

Containment is as important as detection.
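Several containment controls can be enforced at the point where one agent hands work to another. In this sketch, a hypothetical `MeshController` caps delegation depth to limit blast radius, gives each agent a call budget, rejects delegation loops, and honors a kill switch that halts an agent immediately. The limits (depth 3, 10 calls per agent) are arbitrary placeholders.

```python
class Contained(Exception):
    """Raised when a containment control stops a delegation."""


class MeshController:
    def __init__(self, max_depth: int = 3, max_calls_per_agent: int = 10):
        self.max_depth = max_depth
        self.max_calls = max_calls_per_agent
        self.calls = {}
        self.killed = set()

    def kill(self, agent_id: str) -> None:
        # Kill switch: the agent can neither send nor receive further delegations.
        self.killed.add(agent_id)

    def delegate(self, chain: list, target: str) -> list:
        """Check containment rules before chain[-1] hands work to `target`."""
        caller = chain[-1]
        for agent in (caller, target):
            if agent in self.killed:
                raise Contained(f"kill switch active for {agent}")
        if len(chain) >= self.max_depth:
            raise Contained(f"delegation depth limit {self.max_depth} reached")
        if target in chain:
            raise Contained("delegation loop detected")
        used = self.calls.get(caller, 0)
        if used >= self.max_calls:
            raise Contained(f"{caller} exhausted its call budget")
        self.calls[caller] = used + 1
        return chain + [target]


mesh = MeshController(max_depth=3)
chain = mesh.delegate(["orchestrator"], "researcher")
chain = mesh.delegate(chain, "summarizer")
try:
    mesh.delegate(chain, "emailer")
except Contained as err:
    print(err)  # delegation depth limit 3 reached
mesh.kill("researcher")
try:
    mesh.delegate(["orchestrator"], "researcher")
except Contained as err:
    print(err)  # kill switch active for researcher
```

A compromised agent can still misbehave, but these limits bound how far and how often its delegations can spread.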

Observability and Forensics in Agent Communication

Agent communication must be observable to be governable.

Essential observability components include:

- Message logs
- Identity context
- Delegation chains
- Policy decisions
- Execution outcomes

Observability enables incident investigation, compliance reporting, decision replay, and system improvement.

Opaque agent meshes cannot scale safely.
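For forensics, it helps if the log itself is tamper-evident. One common technique is hash chaining: each record stores the hash of the previous one, so editing or deleting an earlier entry breaks verification. This minimal sketch uses SHA-256 from Python's standard library. The record fields mirror the observability components listed above, and the values are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def append(log: list, record: dict) -> None:
    prev = log[-1]["hash"] if log else GENESIS
    body = json.dumps(record, sort_keys=True)
    digest = hashlib.sha256((prev + body).encode()).hexdigest()
    log.append({"record": record, "prev": prev, "hash": digest})


def verify_chain(log: list) -> bool:
    prev = GENESIS
    for entry in log:
        body = json.dumps(entry["record"], sort_keys=True)
        if entry["prev"] != prev:
            return False  # an entry was removed or reordered
        if hashlib.sha256((prev + body).encode()).hexdigest() != entry["hash"]:
            return False  # an entry's content was altered
        prev = entry["hash"]
    return True


chain_of = ["cfo@example.com", "planner-agent", "reporting-agent"]
audit = []
append(audit, {"message": "export Q3 revenue report", "identity": "reporting-agent",
               "delegation_chain": chain_of, "policy_decision": "allow",
               "outcome": "report generated"})
append(audit, {"message": "email report", "identity": "reporting-agent",
               "delegation_chain": chain_of, "policy_decision": "deny",
               "outcome": "blocked: missing email.send scope"})
print(verify_chain(audit))  # True
audit[0]["record"]["policy_decision"] = "deny"  # simulate after-the-fact tampering
print(verify_chain(audit))  # False
```

Hash chaining makes tampering detectable, not impossible. An attacker who can rewrite the whole chain can recompute every hash, so deployments typically anchor the latest hash somewhere the agents cannot write.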

Why LoginRadius Is Foundational for Agent Trust

LoginRadius provides centralized identity governance, fine-grained authorization, lifecycle management, API-first architecture, and support for non-human identities.

These capabilities allow organizations to build agent meshes where communication is identity-verified, scoped, explainable, and auditable.

Agent autonomy without identity governance creates risk. Identity-centric control enables safe scale.

Final Takeaway

AI agents communicate through structured exchanges of intent, context, and delegated authority.

Securing this communication requires:

- Agent meshes with explicit trust boundaries
- Standardized communication protocols
- Identity-centric trust models
- Continuous policy enforcement

In an agentic world, communication drives action. Identity governs that power.

FAQs

What is an agent mesh?

An agent mesh is a distributed network of autonomous agents that dynamically communicate, delegate tasks, and coordinate decisions across systems using identity-bound trust.

How do AI agents communicate with each other?

AI agents communicate through structured, protocol-based exchanges of intent, context, and delegated authority that are validated through identity and policy controls.

Why are trust models important for agent communication?

Because agents act autonomously, trust must be continuously verified to prevent privilege escalation, authority abuse, and cascading risk across the ecosystem.

Are service meshes sufficient for AI agents?

No. Service meshes secure transport-level traffic, but agent meshes must secure intent, authority delegation, and contextual decision-making.