Big Data - Testing Strategy

With the exponential growth in the number of big data applications, testing them has become essential. Testing a big data application involves database, infrastructure, performance, and functional testing. This blog describes a strategy for testing Big Data applications.

Table of Contents

- Big Data - Introduction

- Big Data Testing

- Big Data Testing - Test Data

- Big Data Testing - Test Environment

- Big Data - Performance Testing

- Tools used in Big Data Scenarios

- Big Data Testing - Challenges

- Conclusion

Big Data - Introduction

Earlier, we dealt only with well-structured data hosted in large data warehouses, and invested in maintaining those warehouses and in hiring expert professionals to maintain and secure the information they held. Because the data was structured, it could be queried however the business needed. Now, the exponential growth of data has opened up a new vision for data science, along with some major challenges.

Big Data grows exponentially over time, and it carries raw but very valuable information that can change the future of any enterprise. The term refers to a very large collection of data, which may be gathered from multiple sources or stored within an organization. Consider a real-world example: big companies like Ikea and Amazon leverage big data by collecting data on customers' buying patterns in their stores, their internal stock information, and their inventory demand-supply relationships, and by analyzing all of it within seconds, even in real time, to improve the customer experience.

Extracting information from a large dataset calls for data mining, an analytic process originally designed to explore large datasets. The ultimate goal of data mining is to find consistent patterns or systematic relationships between variables, which help predict the next pattern or behavior.

However, when we apply data mining to very large datasets, our existing approach starts to break down, because big data may contain structured or unstructured data, often in multiple formats.

Big Data Testing

Testing is the art of achieving quality in a software product in terms of functionality, performance, user experience, and usability. For big data testing, however, the focus should be mainly on the functional and performance aspects of an application. Performance is the key parameter of any big data application that is meant to process terabytes of data: the successful processing of terabytes of data on a commodity cluster, together with a number of supporting components, needs to be verified. Processing must be fast and accurate, which demands a high level of testing.

Processing may be of three types:

Depending on the type of processing, different components need to be integrated, along with a NoSQL data store, as required.

Big Data Testing - Test Data

Data plays a vital role in testing big data applications. An application is meant to process data and produce the expected output based on the implemented logic. Because that logic is derived entirely from business requirements and data, it must be verified before moving to production.

1. Test Data Quality

Good-quality test data is as important as the test environment. In the big data world, data can come in any format or size: it may be a document, XML, JSON, PDF, etc., and its size may reach terabytes or petabytes. Hence, test data should also come in multiple formats, and it should be large enough to exercise large-scale data processing. Big data testing needs data whose values are logically valid for the application's requirements and whose format is supported by the application.

Data quality is another aspect of big data testing. Ensuring the quality of data before it is processed by the application ensures the accuracy of the final output. Data quality testing is a huge domain in itself and covers many best practices, including checks for data completeness, conformity, accuracy, validity, duplication, and consistency. It should be included in big data testing, because it underpins the level of accuracy the application is supposed to deliver.
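As an illustration of the data quality checks above, the sketch below counts records that fail four of the listed dimensions: completeness, conformity, validity, and duplication. It assumes a hypothetical call-log record with `call_id`, `msisdn`, and `duration_sec` fields, a made-up phone-number pattern, and an arbitrary one-day limit on call duration; all of these are placeholders for the application's real requirements. Accuracy and consistency usually need a trusted reference dataset, so they are not covered here.

```python
import re
from collections import Counter
from dataclasses import dataclass, field

# Hypothetical schema and rules for a call-log record; replace with the real requirements.
REQUIRED_FIELDS = ("call_id", "msisdn", "duration_sec")
MSISDN_PATTERN = re.compile(r"^\+?\d{10,15}$")  # assumed phone-number format
MAX_DURATION_SEC = 86_400                       # assumed limit: one day


@dataclass
class QualityReport:
    total: int = 0
    incomplete: int = 0     # completeness: a required field is missing or empty
    nonconforming: int = 0  # conformity: value does not match the expected format
    invalid: int = 0        # validity: value is outside the allowed range
    duplicates: int = 0     # duplication: extra occurrences of the same key
    failures: list = field(default_factory=list)  # (call_id, dimension) pairs


def check_quality(records):
    report = QualityReport(total=len(records))
    for r in records:
        if any(r.get(f) in (None, "") for f in REQUIRED_FIELDS):
            report.incomplete += 1
            report.failures.append((r.get("call_id"), "completeness"))
            continue  # the remaining checks need every required field present
        if not MSISDN_PATTERN.match(str(r["msisdn"])):
            report.nonconforming += 1
            report.failures.append((r["call_id"], "conformity"))
        duration = r["duration_sec"]
        if not isinstance(duration, int) or not 0 <= duration <= MAX_DURATION_SEC:
            report.invalid += 1
            report.failures.append((r["call_id"], "validity"))
    # Every occurrence of a key beyond the first counts as one duplicate.
    keys = Counter(r["call_id"] for r in records if r.get("call_id"))
    report.duplicates = sum(count - 1 for count in keys.values())
    return report
```

In practice such checks would run on a sample or partition of the test data before it is fed to the application, and the `failures` list helps trace which records to fix.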

2. Test Data Generation

Generating test data is another challenging job, because there are multiple parameters to take care of. It needs a tool that can generate data and allows functions or logic to be applied to it. A tool such as Talend Open Studio is a strong candidate to fulfill these data generation requirements.
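Talend is one option; purely for illustration, the same idea can be sketched in plain Python. The generator below produces reproducible synthetic records (thanks to a fixed random seed) for a hypothetical call-log schema and serializes them in two formats, JSON Lines and CSV, so the application can be fed more than one input format. This is not Talend's API, and the schema and value ranges are assumptions.

```python
import csv
import io
import json
import random


def generate_call_logs(n, seed=42):
    """Yield n synthetic call-log records (hypothetical schema)."""
    rng = random.Random(seed)  # fixed seed -> the same test data on every run
    for i in range(n):
        yield {
            "call_id": f"c{i:08d}",
            "msisdn": "+91" + "".join(rng.choice("0123456789") for _ in range(10)),
            "duration_sec": rng.randint(0, 3600),
            "call_type": rng.choice(["VOICE", "SMS", "DATA"]),
        }


def to_json_lines(records):
    """Serialize records as JSON Lines (one JSON object per line)."""
    return "".join(json.dumps(r) + "\n" for r in records)


def to_csv(records):
    """Serialize records as CSV with a header row taken from the first record."""
    records = list(records)
    if not records:
        return ""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=list(records[0].keys()))
    writer.writeheader()
    writer.writerows(records)
    return buf.getvalue()
```

Because `generate_call_logs` is a generator, records can be streamed to disk in chunks instead of being held in memory, which matters once the volume approaches production scale.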

3. Data Storage

Once test data of sufficient quality has been generated, it needs to be hosted on a file system. For testing big data applications, data should be stored in a system similar to the production environment. Since we are working in the big data space, the test system should have multiple nodes, and the data must be distributed across them.
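To reason about how test data will be spread across a test cluster, the rough planning sketch below splits a file into fixed-size blocks and assigns each block to several distinct nodes. The defaults mirror the HDFS default block size (128 MB) and replication factor (3), but the round-robin placement is a simplification, not HDFS's actual rack-aware placement policy. The function and node names are hypothetical.

```python
import math

BLOCK_SIZE = 128 * 1024 * 1024  # 128 MB, the HDFS default block size
REPLICATION = 3                 # HDFS default replication factor


def plan_blocks(file_size, nodes, block_size=BLOCK_SIZE, replication=REPLICATION):
    """Return [(block_index, [node, ...]), ...] using simple round-robin placement.

    This is NOT HDFS's rack-aware policy; it only illustrates that every block
    should be stored on `replication` distinct nodes.
    """
    if replication > len(nodes):
        raise ValueError("replication factor exceeds the number of nodes")
    n_blocks = math.ceil(file_size / block_size)
    plan = []
    for b in range(n_blocks):
        replicas = [nodes[(b + r) % len(nodes)] for r in range(replication)]
        plan.append((b, replicas))
    return plan
```

For example, a 1 GiB file on a four-node test cluster yields eight blocks, each stored on three different nodes; the `ValueError` guards against a test cluster that is too small for the configured replication factor.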

Big Data Testing - Test Environment

In big data testing, the test environment should be able to process a large amount of data efficiently, just as the production environment does. Real-time production clusters generally have 30-40 nodes, with data distributed across the cluster nodes, and each node must meet some minimum configuration. A cluster may be deployed in one of two modes: on-premises or in the cloud. Testing big data requires the same kind of environment, with nodes that meet a comparable minimum configuration.

Scalability is also desirable in a big data test environment: it helps to study how the application's performance changes as resources are added. That data can be used to define the SLA (service level agreement) for that particular application.
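One way to turn scalability runs into SLA input is to run the same job on the same data with different cluster sizes and compare the timings. The sketch below computes speedup and parallel efficiency relative to the smallest cluster measured, and finds the smallest measured cluster that meets a target run time. The function names are hypothetical, and the runtimes in the example are illustrative only, not measurements.

```python
def scaling_report(runtimes):
    """runtimes: {node_count: seconds} for the same job on the same dataset.

    Returns {node_count: (speedup, efficiency)} relative to the smallest cluster.
    An efficiency of 1.0 means perfectly linear scaling.
    """
    base_nodes = min(runtimes)
    base_time = runtimes[base_nodes]
    report = {}
    for nodes, seconds in sorted(runtimes.items()):
        speedup = base_time / seconds
        efficiency = speedup / (nodes / base_nodes)
        report[nodes] = (round(speedup, 2), round(efficiency, 2))
    return report


def min_nodes_for_sla(runtimes, sla_seconds):
    """Smallest measured cluster size that finishes within the SLA, or None."""
    for nodes in sorted(runtimes):
        if runtimes[nodes] <= sla_seconds:
            return nodes
    return None


if __name__ == "__main__":
    runtimes = {4: 1200, 8: 650, 16: 400}  # hypothetical seconds per run
    print(scaling_report(runtimes))        # {4: (1.0, 1.0), 8: (1.85, 0.92), 16: (3.0, 0.75)}
    print(min_nodes_for_sla(runtimes, 700))  # 8
```

Efficiency falling well below 1.0 as nodes are added signals diminishing returns, which is useful when deciding what cluster size an SLA can realistically assume.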

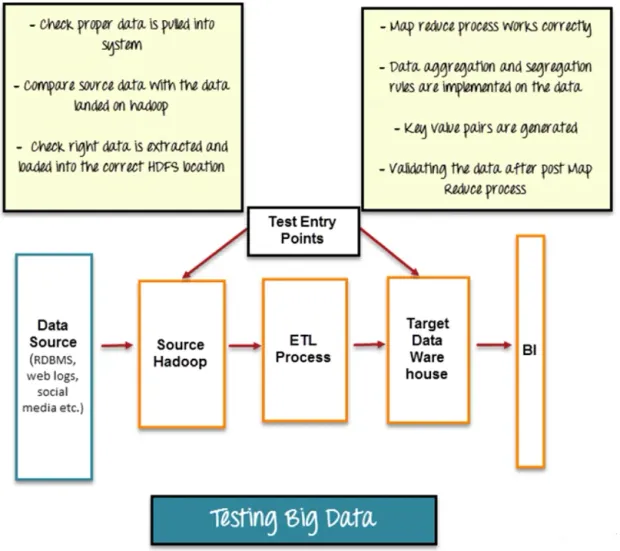

Big Data Testing can be categorized into three stages:

Step 1: Data Staging Validation

The first stage of big data testing, also known as the pre-Hadoop stage, involves process validation.

- Data collected from various sources, such as RDBMS and weblogs, must be validated before it is added to the system.

- To ensure the data matches, compare the source data with the data added to the Hadoop system.

- Make sure that the right data is extracted and loaded into the correct HDFS location.
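The source-to-HDFS comparison in this stage can be sketched as an order-independent diff. The code below assumes both sides have already been exported as lists of dictionaries (how they are read, for example by querying the source RDBMS and exporting files from HDFS, is outside the sketch), hashes each record over a chosen set of columns, and reports record counts plus how many records are missing from, or unexpected in, the target. The function names and column list are hypothetical.

```python
import hashlib
from collections import Counter


def row_digest(row, columns):
    """Stable SHA-256 digest of one record, restricted to the compared columns."""
    # Values are compared by their str() form, so 1 and "1" are treated as equal.
    canonical = "\x1f".join("" if row.get(c) is None else str(row[c]) for c in columns)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def compare_staged(source_rows, loaded_rows, columns):
    """Order-independent comparison of source data and data loaded into HDFS."""
    src = Counter(row_digest(r, columns) for r in source_rows)
    dst = Counter(row_digest(r, columns) for r in loaded_rows)
    return {
        "source_count": sum(src.values()),
        "loaded_count": sum(dst.values()),
        "missing_in_target": sum((src - dst).values()),     # in source, not loaded
        "unexpected_in_target": sum((dst - src).values()),  # loaded, not in source
    }
```

Using a `Counter` of digests rather than a set means duplicated rows are also caught: a row loaded twice shows up as one unexpected record.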

Step 2: MapReduce Validation

MapReduce validation is the second stage. The tester validates the business logic on every node, and then validates it again by running the job against multiple nodes, to make sure that:

- The MapReduce process works correctly.

- The data aggregation or segregation rules are applied to the data.

- Key-value pairs are created correctly.

- The data is validated after the MapReduce process.
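A mapper and reducer can also be exercised locally before they run on a cluster. The sketch below simulates input splits, the map phase, the shuffle (grouping values by key), and the reduce phase in plain Python, then compares the result with an independent, non-MapReduce computation of the same aggregation. The aggregation rule (total call duration per caller) and all function names are hypothetical, and this is a single-process simulation, not Hadoop's API.

```python
from collections import defaultdict
from itertools import chain


def map_phase(record):
    """Mapper under test: emit (key, value) pairs (hypothetical rule)."""
    yield record["msisdn"], record["duration_sec"]


def reduce_phase(key, values):
    """Reducer under test: aggregate all values for one key."""
    return key, sum(values)


def run_local_mapreduce(records, num_splits):
    """Simulate splits -> map -> shuffle -> reduce in a single process."""
    splits = [records[i::num_splits] for i in range(num_splits)]  # stand-in for splits on nodes
    mapped = chain.from_iterable(map_phase(r) for split in splits for r in split)
    shuffled = defaultdict(list)  # shuffle: group every value by its key
    for key, value in mapped:
        shuffled[key].append(value)
    return dict(reduce_phase(k, vs) for k, vs in shuffled.items())


def reference_totals(records):
    """Independent computation of the expected output, without MapReduce."""
    totals = defaultdict(int)
    for r in records:
        totals[r["msisdn"]] += r["duration_sec"]
    return dict(totals)
```

Running the comparison with several split counts checks that the output does not depend on how the input happens to be divided across nodes.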

Step 3: Output Validation Phase

Output validation is the third and final stage of big data testing. At this point the output data files have been created and are ready to be moved to an EDW (Enterprise Data Warehouse) or any other system, as required. The third stage consists of:

- Checking that the transformation rules are applied correctly.

- Ensuring that the data is loaded into the target system successfully and that its integrity is maintained.

- Checking that there is no data corruption by comparing the target data with the data in HDFS.
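The first and third checks of this stage can be combined into one comparison keyed on a record identifier: recompute the expected target row from each HDFS output row using the transformation rule, then compare it with what actually landed in the warehouse. In the sketch below, the rule (billable minutes rounded up from seconds), the field names, and the function names are all hypothetical placeholders.

```python
import math


def expected_transform(hdfs_row):
    """Hypothetical transformation rule: billable minutes are rounded up."""
    return {
        "call_id": hdfs_row["call_id"],
        "billable_min": math.ceil(hdfs_row["duration_sec"] / 60),
    }


def validate_output(hdfs_rows, edw_rows, key="call_id"):
    """Compare warehouse rows against the rule applied to the HDFS output."""
    expected = {r[key]: expected_transform(r) for r in hdfs_rows}
    actual = {r[key]: r for r in edw_rows}
    return {
        "not_loaded": sorted(expected.keys() - actual.keys()),
        "unexpected": sorted(actual.keys() - expected.keys()),
        "rule_violations": sorted(
            k for k in expected.keys() & actual.keys() if actual[k] != expected[k]
        ),
    }
```

The sketch assumes the key is unique on both sides; duplicates would be collapsed by the dictionaries and should be checked separately, for example with a count comparison.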

Big Data - Performance Testing

Big data applications are meant to process a large amount of data, and they are expected to process the maximum amount of data in the minimum time. At the same time, application jobs should consume only a reasonable amount of memory and CPU. In big data testing, performance plays an important role and helps define SLAs. It covers the performance of both the base machine and the cluster. For example, in the case of Hadoop, MapReduce jobs should be written following proper coding guidelines so that they perform well in the production environment. MapReduce jobs can also be profiled before integration to ensure optimized execution.
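Timing and profiling a job before integration can be sketched with Python's standard library. `benchmark` runs a job callable several times after a warm-up run and reports per-iteration wall-clock times, and `profile` uses `cProfile` to list the functions with the highest cumulative time. The function names and the SLA threshold are assumptions; for a job that runs on a cluster, `profile` only sees the local client process, so cluster-side CPU and memory figures have to come from the cluster's own monitoring.

```python
import cProfile
import pstats
import statistics
import time


def benchmark(job, iterations=5, warmup=1):
    """Run `job` (a zero-argument callable) repeatedly and time each iteration."""
    for _ in range(warmup):
        job()  # warm-up run, excluded from the measurements
    timings = []
    for _ in range(iterations):
        start = time.perf_counter()
        job()
        timings.append(time.perf_counter() - start)
    return {
        "iterations": iterations,
        "timings": timings,
        "median_s": statistics.median(timings),
        "max_s": max(timings),
    }


def meets_sla(result, sla_seconds):
    """Judge against the slowest iteration so one slow run is not hidden by the median."""
    return result["max_s"] <= sla_seconds


def profile(job, top=10):
    """Print the `top` functions by cumulative time for a single run of `job`."""
    profiler = cProfile.Profile()
    profiler.enable()
    job()
    profiler.disable()
    pstats.Stats(profiler).sort_stats("cumulative").print_stats(top)
```

Recording every iteration, not just an average, also gives the per-iteration execution times that performance testing needs to track.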

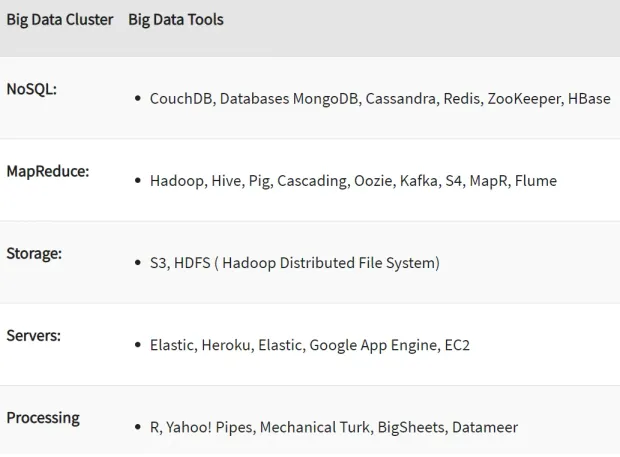

Tools used in Big Data Scenarios

Big Data Testing - Challenges

Big data testing involves certain challenges that need to be addressed by the testing approach.

1. Test Data

Exponential growth in data has been observed over the last few years. A huge amount of data is generated daily and stored in large data centers or data marts, so there is a demand for efficient storage and for ways to process that data optimally. The telecom industry, for instance, generates a large number of call logs every day, which need to be processed to improve the customer experience and to compete in the market. The same applies to test data: it should be similar to production data and should contain all the logically acceptable fields.

This is a challenge for testing big data applications: generating test data similar to production data is genuinely hard, and the test data must also be large enough to verify that the big data application works properly.

2. Environment

Data processing depends heavily on the environment and its performance. An optimized environment setup gives high performance and fast data processing. Big data is processed using distributed computing, with the data hosted in a distributed environment. The testing environment should therefore have multiple nodes, with data distributed across them. At the same time, those nodes need to be monitored to ensure the highest performance with minimal CPU and memory utilization, and there should be a graphical view of node performance. So, the test environment has two aspects, distributed nodes and their monitoring, and both should be covered in the testing approach.
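As a minimal illustration of node monitoring, the sketch below summarizes CPU and memory samples per node, flags nodes that exceed thresholds, and renders a crude text bar chart as a stand-in for a real dashboard. It assumes the samples (as percentages) have already been collected by whatever monitoring agent the cluster uses; the thresholds and function names are hypothetical.

```python
from statistics import mean


def summarize_nodes(samples, cpu_limit=85.0, mem_limit=90.0):
    """samples: {node: [(cpu_percent, mem_percent), ...]} collected during a test run.

    Returns {node: {"avg_cpu": ..., "peak_mem": ..., "alert": bool}}.
    """
    summary = {}
    for node, points in samples.items():
        cpus = [cpu for cpu, _ in points]
        mems = [mem for _, mem in points]
        avg_cpu, peak_mem = mean(cpus), max(mems)
        summary[node] = {
            "avg_cpu": round(avg_cpu, 1),
            "peak_mem": peak_mem,
            "alert": avg_cpu > cpu_limit or peak_mem > mem_limit,
        }
    return summary


def render_bars(summary, width=20):
    """Crude text chart of average CPU per node; '!' marks nodes over a threshold."""
    lines = []
    for node in sorted(summary):
        info = summary[node]
        filled = int(info["avg_cpu"] / 100 * width)
        flag = " !" if info["alert"] else ""
        lines.append(f"{node:<8}|{'#' * filled:<{width}}| {info['avg_cpu']:5.1f}%{flag}")
    return "\n".join(lines)
```

Average CPU is paired with peak memory here because a brief memory spike can fail a job, whereas short CPU spikes are usually harmless; a different test plan may choose other aggregates.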

3. Performance

Performance is the key requirement of any big data application, and it is one of the reasons enterprises are moving toward NoSQL technologies that can handle their big data and process it in the minimum time frame. A large dataset should be processed within a reasonably short time frame. In big data testing, performance testing is a challenge: it requires monitoring the cluster nodes during execution and measuring the time taken by every iteration of execution.

Conclusion

Big Data is a trend that is revolutionizing society and its organizations, because it makes it possible to take advantage of a wide variety of data, in large volumes and at high speed. Keeping these challenges in mind, we have defined an approach to testing big data applications. This approach makes it easier for a test engineer to verify and certify the implementation of business requirements, and for stakeholders it saves a large part of the cost that would otherwise have to be invested to get the expected business returns.
